Author: Zhang Dejun, security expert at iFLYTEK

Background

Static Application Security Testing (SAST) products help enterprises automatically discover security vulnerabilities and risks in application code during development, and they play an important role in enterprise application security.

My company plans to purchase a commercial SAST product in the near future. There are dozens of such products on the market and many dimensions on which to evaluate them. Setting business factors aside, the ability to detect security vulnerabilities and risks is the core capability of a SAST product, so we want to choose the product with the strongest detection capability.

Problems Encountered

We first shortlisted three candidates: well-known foreign SAST product A, well-known foreign SAST product B, and domestic SAST product C.

We then investigated how the industry currently evaluates application security testing products. The prevailing approach relies on public test sample sets such as WebGoat, SecuriBench, OWASP Benchmark, and Juliet Benchmark. After further analysis, we found that these test sets have the following problems:

(1) The completeness of the test set cannot be guaranteed. We initially tested on the OWASP Benchmark, which has more than 2,000 samples. All three SAST products achieved a recall rate of 100%. This result gave us no way to tell the products apart and was clearly inconsistent with their behavior in practice, which indicates that the test set does not cover enough scenarios.

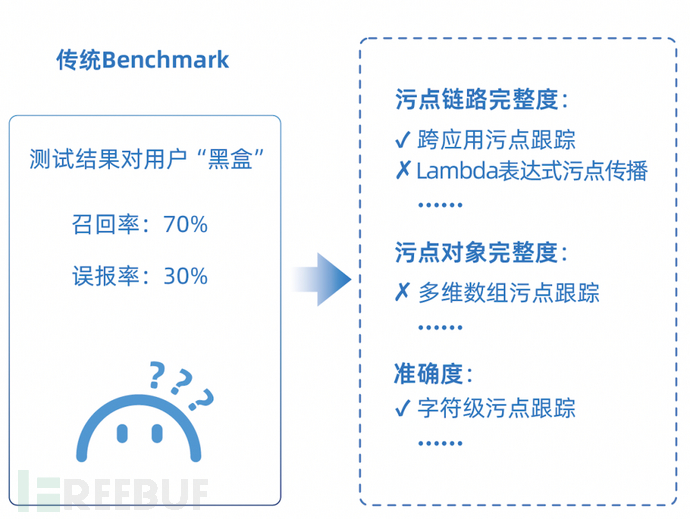

(2) The test results are not interpretable and are a “black box” to users. Results obtained from the existing test sample sets generally provide only a recall rate and a false alarm rate. We wanted to see where the differences in product detection capability actually come from, so that we could understand each product clearly.

(3) The test sets are outdated. The existing test sample sets have not been updated since 2016, while SAST technology keeps developing. Evaluating the latest SAST products with these “antiques” makes it difficult for the results to reflect reality objectively.

How to select the product with the strongest detection capability from these three candidates therefore became a challenge for us.

Problem Solving

While we were puzzling over this, we noticed that the Ant Group Security Team and the School of Cyberspace Security of Zhejiang University had jointly built and open-sourced the xAST evaluation system project in 2023【1】. The project aims to evaluate the security detection capabilities of application security testing products of various types, such as SAST, IAST, and DAST.

Compared with traditional test sample sets, the xAST evaluation system impressed us the most in two aspects:

(1) Traditional test sample sets are designed from a vulnerability perspective, and different types of products (such as SAST/IAST/DAST) share the same sample set. However, the underlying principles of these testing technologies differ, so one sample set cannot fit all product types. The xAST evaluation system is the first in the industry to take the tool perspective: it defines evaluation dimensions and evaluation items for each type of tool, and then designs test samples based on those evaluation items.

A test sample set designed from evaluation items in this way is more systematic and targeted, and its samples are distributed more evenly, so the evaluation results are no longer a “black box”. Users can map a tool's actual result on each sample to the corresponding evaluation item and obtain a test result in the style of a “physical examination report”, as shown in the figure below.

Comparison of evaluation results between traditional Benchmark and xAST evaluation system【2】

The evaluation dimensions of the xAST evaluation system include completeness, accuracy, compatibility, performance, access cost, and others. Each evaluation dimension is further divided into several evaluation items, and each evaluation item corresponds to a test sample. For details, please refer to【1】.

(2) The xAST evaluation system is based on the product detection principle and is the first in the industry to design a layered evaluation system, including engine capabilities, rule capabilities, and product capabilities. The evaluation system at the engine capability level has been open sourced.

In essence, the capabilities of application security testing products are layered. Some capabilities sit at a lower level, such as the ability to track tainted data. These are usually difficult and costly to implement and deserve users' close attention. Other capabilities sit at a higher level, such as support for a particular sink point, which can be added through simple configuration. Traditional vulnerability sample sets do not distinguish between these layers, so their results cannot show whether a missed detection is caused by a missing rule configuration or by a gap in the engine itself.

We also contacted the Ant security experts in charge of the xAST evaluation system project through the open source community. We learned that, since the project was open-sourced in 2023, more than 20 corporate users, including Alibaba and Huawei, have used it in commercial procurement and in the selection of open source application security testing products. It also serves as an evaluation standard used by the Open Atom Open Source Foundation to evaluate open source security tools.

For these reasons, we decided to adopt the xAST evaluation system to evaluate the detection capabilities of the three SAST products in this product selection.

Evaluation Results

Based on the xAST evaluation system, we tested the detection capabilities of the three SAST products for the Java language. The test focused on two dimensions: detection completeness and detection accuracy. The results are shown in the following table.

| Product | Completeness | Accuracy |
| --- | --- | --- |
| Foreign SAST product A | 84% | 44% |
| Foreign SAST product B | 76% | 77% |
| Domestic SAST product C | 98% | 77% |
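The article does not state how the two percentages are calculated. As a rough sketch only, the following Java code assumes that completeness is the share of vulnerable samples a tool reports, and that accuracy is the share of safe samples on which the tool stays silent. The class `ScoreSketch`, the type `SampleResult`, and both formulas are assumptions made for illustration and may differ from the scoring used by the xAST project.

```java
import java.util.List;

// Hypothetical scoring sketch; names and formulas are assumptions, not the xAST definitions.
class ScoreSketch {

    // One row per test sample: the evaluation item it belongs to, whether the
    // sample really contains a vulnerability, and whether the tool reported one.
    static final class SampleResult {
        final String evaluationItem;
        final boolean vulnerable;
        final boolean reported;

        SampleResult(String evaluationItem, boolean vulnerable, boolean reported) {
            this.evaluationItem = evaluationItem;
            this.vulnerable = vulnerable;
            this.reported = reported;
        }
    }

    // Assumed meaning of "completeness": reported vulnerable samples / all vulnerable samples.
    static double completeness(List<SampleResult> results) {
        long vulnerable = results.stream().filter(r -> r.vulnerable).count();
        long detected = results.stream().filter(r -> r.vulnerable && r.reported).count();
        return vulnerable == 0 ? 0.0 : (double) detected / vulnerable;
    }

    // Assumed meaning of "accuracy": safe samples with no report / all safe samples.
    static double accuracy(List<SampleResult> results) {
        long safe = results.stream().filter(r -> !r.vulnerable).count();
        long silent = results.stream().filter(r -> !r.vulnerable && !r.reported).count();
        return safe == 0 ? 0.0 : (double) silent / safe;
    }
}
```

Keeping the evaluation item on every row is what allows an aggregate score to be broken down per item afterwards, instead of stopping at two numbers.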

In addition, we mapped the test results to the evaluation items of the xAST evaluation system and obtained the more detailed results below, which give a finer-grained view of how the detection capabilities of the three products differ.

For detection completeness, the evaluation system covers two aspects: the completeness of tainted object tracking and the completeness of tainted link tracking. Tainted object tracking examines whether a SAST product can track taint on various objects and basic data types. Tainted link tracking examines whether a SAST product supports the various scenarios through which taint propagates.

For detection accuracy, the evaluation system covers five dimensions of taint tracking: flow sensitivity, object sensitivity, domain sensitivity (commonly called field sensitivity in the program-analysis literature), path sensitivity, and context sensitivity.
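To make the idea of a taint propagation scenario concrete, here is a minimal Java sketch of three such samples: ternary operator, lambda expression, and type conversion propagation. These are hypothetical samples written for this article, not files from the xAST repository. `source()` stands in for attacker-controlled input and `sink()` for a dangerous API; both names are assumptions.

```java
import java.util.function.Function;

// Hypothetical test samples, not taken from the xAST repository.
class TaintPropagationSamples {

    // Records what reached the sink so the data flow can be observed at run time.
    static String lastSinkArgument = null;

    // Stand-in for attacker-controlled input (assumed name).
    static String source() {
        return "tainted-input";
    }

    // Stand-in for a dangerous API such as a command or query executor (assumed name).
    static void sink(String value) {
        lastSinkArgument = value;
    }

    // Ternary operator propagation: the tainted value flows through one branch of ?:.
    static void ternaryCase(boolean flag) {
        String data = source();
        String result = flag ? data : "constant";
        sink(result);
    }

    // Lambda expression propagation: the tainted value flows through a lambda body.
    static void lambdaCase() {
        Function<String, String> wrap = s -> "[" + s + "]";
        sink(wrap.apply(source()));
    }

    // Type conversion propagation: the tainted value survives an upcast and a downcast.
    static void castCase() {
        Object boxed = source();
        String unboxed = (String) boxed;
        sink(unboxed);
    }
}
```

In every case the tainted value really does reach the sink, so a product that reports nothing has missed a true flow for that evaluation item.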

SAST Accuracy Evaluation Item Description Table

| Accuracy evaluation item | Description |
| --- | --- |
| Flow sensitivity | Analyzes the execution order of the program |
| Object sensitivity | Supports analysis of objects with the same name in different paths, as well as the state and scope of objects |
| Domain sensitivity | Supports analysis of fields or elements of data types such as objects and containers |
| Path sensitivity | Supports analysis of branch paths |
| Context sensitivity | Uses the context of a function call to analyze the different execution paths a function takes when its actual parameters or global variables differ |
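Two of these evaluation items can be illustrated with a short Java sketch: a flow-sensitivity sample in which a tainted variable is overwritten with a hard-coded value, and a domain-sensitivity sample in which only one map entry is tainted. The samples are hypothetical and written for illustration; `source()` and `sink()` are assumed stand-ins for a taint source and a dangerous API.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical accuracy samples, not taken from the xAST repository.
// Both methods are safe, so any report on them is a false positive.
class TaintAccuracySamples {

    // Records what reached the sink so the data flow can be observed at run time.
    static String lastSinkArgument = null;

    // Stand-in for attacker-controlled input (assumed name).
    static String source() {
        return "tainted-input";
    }

    // Stand-in for a dangerous API (assumed name).
    static void sink(String value) {
        lastSinkArgument = value;
    }

    // Flow sensitivity: the taint is overwritten before the sink is reached.
    // An analysis that ignores statement order still treats 'data' as tainted.
    static void flowSensitiveCase() {
        String data = source();
        data = "hardcoded";
        sink(data);
    }

    // Domain sensitivity: only the "user" entry is tainted, and the sink reads "lang".
    // An analysis that taints the whole map reports this safe read.
    static void mapCase() {
        Map<String, String> params = new HashMap<>();
        params.put("user", source());
        params.put("lang", "en");
        sink(params.get("lang"));
    }
}
```

Completeness samples contain a real flow that must be found, whereas accuracy samples like these contain no flow at all, so a product is scored on staying silent.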

Comparison of the Java detection capability evaluation results for the three SAST products (partial)

| Evaluation dimension | Evaluation category | Evaluation item | Foreign product A | Foreign product B | Domestic product C |
| --- | --- | --- | --- | --- | --- |
| Completeness | Tainted object tracking completeness | Array object tracking | Detected | Detected | Detected |
| Completeness | Tainted object tracking completeness | Integer tracking | Detected | Not detected | Detected |
| Completeness | Tainted object tracking completeness | Char tracking | Detected | Detected | Detected |
| Completeness | Tainted object tracking completeness | Array element tracking | Detected | Not detected | Detected |
| Completeness | Tainted object tracking completeness | … | … | … | … |
| Completeness | Tainted link tracking completeness | Nested function call propagation | Not detected | Detected | Detected |
| Completeness | Tainted link tracking completeness | Reflection call propagation | Not detected | Not detected | Not detected |
| Completeness | Tainted link tracking completeness | this call propagation | Detected | Not detected | Detected |
| Completeness | Tainted link tracking completeness | Lambda expression propagation | Detected | Not detected | Detected |
| Completeness | Tainted link tracking completeness | native method propagation | Detected | Not detected | Detected |
| Completeness | Tainted link tracking completeness | Ternary operator propagation | Not detected | Not detected | Detected |
| Completeness | Tainted link tracking completeness | Type conversion propagation | Not detected | Not detected | Detected |
| Completeness | Tainted link tracking completeness | … | … | … | … |
| Accuracy | Taint tracking flow sensitivity | Tainted variable reassigned a hard-coded value | False positive | False positive | Correctly identified |
| Accuracy | Taint tracking object sensitivity | Confusion between objects with the same name | False positive | Correctly identified | Correctly identified |
| Accuracy | Taint tracking domain sensitivity | Some elements in a map are tainted | False positive | Correctly identified | Correctly identified |
| Accuracy | Taint tracking domain sensitivity | Some elements in a queue are tainted | False positive | False positive | False positive |
| Accuracy | Taint tracking domain sensitivity | Some characters in a string are tainted | False positive | False positive | False positive |
| Accuracy | Taint tracking domain sensitivity | … | … | … | … |
| Accuracy | Taint tracking path sensitivity | … | … | … | … |
| Accuracy | Taint tracking context sensitivity | … | … | … | … |

From the detailed evaluation results, we can draw the following conclusions:

(1) In terms of detection completeness, domestic SAST product C has the strongest overall capability, followed by foreign SAST product A, while foreign SAST product B is relatively weak.

- Domestic SAST product C supports most tainted object tracking scenarios and taint link propagation scenarios, but does not support detection in the reflection call propagation scenario.

- Foreign SAST product A also supports tracking of most tainted objects. For taint link tracking it handles the more common scenarios well, but does not support 18 taint propagation scenarios, including nested function calls, combinations of syntactic sugar (such as a this call combined with a lambda expression), ternary operators, and forced type conversions.

- Foreign SAST product B fails to support 5 types of tainted object tracking. For taint link tracking it does not support 23 taint propagation scenarios, including lambda expressions, native methods, passing specific array elements as parameters, and this calls.

(2) In terms of detection accuracy, domestic SAST product C has the strongest overall capability, followed by foreign SAST product B, while foreign SAST product A is relatively weak.

- Domestic SAST product C handles both flow sensitivity and object sensitivity well. For domain sensitivity it produces false positives in some scenarios, such as when only some elements in a Queue object are tainted.

- Foreign SAST product B handles object-sensitive scenarios well, but its support for flow-sensitive scenarios and some domain-sensitive scenarios is insufficient. Examples include a tainted object being reassigned a safe hard-coded value, and only some elements in a Queue object being tainted.

- Foreign SAST product A has insufficient capabilities in all of these scenarios and suffers from serious false alarms.

- None of the three SAST products fully supports character-level taint tracking, that is, the propagation scenario where only some characters in a string are tainted. This limitation stems from the working principles of SAST products, for which this scenario is comparatively hard to detect.
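The character-level scenario can be sketched in Java as follows. This is a hypothetical sample written for illustration, with `source()` and `sink()` as assumed stand-ins for a taint source and a dangerous API. A tainted value is appended to a constant prefix, and only the constant prefix is then passed to the sink.

```java
// Hypothetical sample, not taken from the xAST repository.
class PartialStringTaintSample {

    // Records what reached the sink so the data flow can be observed at run time.
    static String lastSinkArgument = null;

    // Stand-in for attacker-controlled input (assumed name).
    static String source() {
        return "tainted-input";
    }

    // Stand-in for a dangerous API (assumed name).
    static void sink(String value) {
        lastSinkArgument = value;
    }

    static void partialTaintCase() {
        String prefix = "SELECT";
        // Only the characters after the prefix come from the tainted source.
        String combined = prefix + source();
        // The substring covers exactly the constant prefix, so it is safe.
        String safePart = combined.substring(0, prefix.length());
        sink(safePart);
    }
}
```

A tool that marks a string as tainted as a whole sees a tainted `combined` flowing into `substring` and then into the sink, and reports a finding even though no tainted character arrives there.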

Summary

Through this evaluation, we found that the detection capability of domestic SAST product C exceeded our expectations. Its detection capability for Java surpasses that of comparable first-class foreign products, and its overall performance is the best of the three.

In addition, the open source xAST evaluation system does make it possible to show the detection capabilities of application security testing products in a more complete and fine-grained way, so that these products are no longer “black boxes” for users. Currently, the xAST evaluation system supports only Java and Node.js in the SAST field. We look forward to it supporting more languages, reflecting the detection capabilities of SAST products more comprehensively, and meeting the evaluation needs of more users.

【1】xAST evaluation system community homepage: https://xastbenchmark.github.io

【2】xAST evaluation system GitHub: https://github.com/alipay/ant-application-security-testing-benchmark
