Did it work? Probably

It shares some features with quantum annealers, but it’s easier to build and operate.

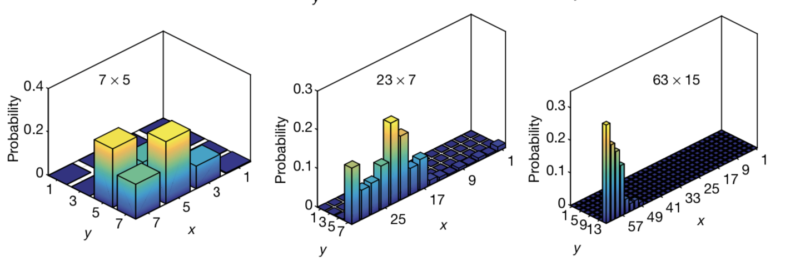

The correct answer is a matter of probabilities, so some related wrong answers also appear with some frequency.

The phenomenal success of our integrated circuits managed to obscure an awkward fact: they’re not always the best way to solve problems. The features of modern computers — binary operations, separated processing and memory, and so on — are extremely good at solving a huge range of computational problems. But there are things they’re quite bad at, including factoring large numbers, optimizing complex sets of choices, and running neural networks.

Even before the performance gains of current processors had leveled off, people were considering alternative approaches to computing that are better for some specialized tasks. For example, quantum computers could offer dramatic speed-ups in applications like factoring numbers and database searches. D-Wave’s Quantum Optimizer handles (wait for it) optimization problems well. And neural network computing has been done with everything from light to a specialized form of memory (called a memristor).

But the list of alternative computing architectures that have been proposed is larger than the list of those that have actually been implemented in functional form. Now, a team of Japanese and American researchers has added another entry to the “functional” category: probabilistic computing. Their hardware is somewhere in between a neural network computer and a quantum optimizer, but they’ve shown it can factor integers using commercial-grade parts at room temperature.

Probably magnets

So what is a probabilistic computer? Pretty much just what it sounds like. Instead of taking on a definitive value, its individual units (here termed “p-bits”) are only likely to adopt a specific value. If you tracked their behavior over time, they’d flip values at random moments, but a p-bit can end up spending more of its time in one of its two possible values. To perform computations, a system would have to ensure that each p-bit spends more of its time in the state that reflects the appropriate value.
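To make the idea concrete, here is a minimal software sketch of a single p-bit. It assumes a common textbook-style model in which a bias input is passed through `tanh` and compared against uniform noise; the function names `pbit_sample` and `fraction_up` and the `bias` parameter are illustrative and are not taken from the researchers' hardware.

```python
import math
import random

def pbit_sample(bias, rng):
    """Return +1 or -1 at random; a larger bias makes +1 more likely.

    Assumed model: compare tanh(bias) against noise drawn uniformly
    from [-1, 1], so P(+1) = (1 + tanh(bias)) / 2.
    """
    return 1 if rng.uniform(-1.0, 1.0) < math.tanh(bias) else -1

def fraction_up(bias, steps=20000, seed=0):
    """Fraction of samples in which the simulated p-bit reads +1."""
    rng = random.Random(seed)
    ups = sum(1 for _ in range(steps) if pbit_sample(bias, rng) == 1)
    return ups / steps
```

With a bias of zero, the simulated p-bit splits its time roughly evenly between its two values; a positive bias tilts it toward +1 without ever pinning it there, which is the behavior described above.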

To implement this idea in hardware, the researchers took an experimental memory technology and made it worse. The technology is called a magnetic tunnel junction, and its primary components are two magnets sandwiching a layer of insulator. Electrons can tunnel through the insulator, creating a current through the device. That tunneling, however, is far more efficient when the orientation of the two magnets is aligned, creating an “on” state, or binary 1. Mess up the alignment enough and the current drops dramatically, creating an off state, or binary 0. Since we can control the orientation of the two magnetic layers, we can switch the device on and off as needed.

To actually use this as a form of memory, the setup has been manufactured so the magnets’ orientation remains stable for years, even with the current shut off. But it’s also possible to structure these devices so that they’re awful as memory, as the magnets will change state within a few milliseconds. This leaves the device fluctuating between on and off, a feature that led other researchers to test a version as a hardware-based random number generator.

But these same features also mean it should function as a p-bit, which raises the issue of how to turn this idea into a probabilistic computer.

To get that to work, the authors hooked a small collection of these bits up to a more traditional controller chip. The controller performs three critical functions. To begin with, it translates the calculation that needs to be performed into a series of inputs sent to the p-bits. Next, it samples the outputs of all the p-bits and uses that as feedback to adjust the behavior of each individual p-bit (in this sense, it’s a bit like a hardware-based neural network). During this time, the probabilistic nature means that the collection of p-bits will sample a large portion of the solution space of the problem, similar to a quantum computer, although the latter exists in a state that includes all potential solutions simultaneously.

After enough rounds of feedback, the controller then starts to record the output states. Since the probabilistic nature means that some of the p-bits will sometimes be in the wrong state, it takes multiple samplings to understand the probabilities and thus the answer to the problem.
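The feedback-then-sample loop can be imitated in software. The sketch below is not the researchers' algorithm; it assumes a simple cost function, (x·y − target)², and an ordinary Gibbs-sampling update in which each simulated p-bit is re-biased according to the current state of the others. The names `decode`, `energy`, and `sample_factors`, and the parameters `beta`, `burn_in`, and `sweeps`, are all hypothetical choices made for illustration.

```python
import math
import random
from collections import Counter

def decode(bits):
    """Map p-bit states (0/1) to an odd integer; the lowest bit is fixed at 1."""
    return 1 + sum(b << (i + 1) for i, b in enumerate(bits))

def energy(state, target, split):
    """Assumed cost: zero only when the two decoded numbers multiply to target."""
    x, y = decode(state[:split]), decode(state[split:])
    return (x * y - target) ** 2

def sample_factors(target, n_bits=4, split=2, beta=0.02,
                   burn_in=200, sweeps=5000, seed=1):
    """Run feedback rounds, then tally how often each (x, y) pair shows up."""
    rng = random.Random(seed)
    state = [rng.randint(0, 1) for _ in range(n_bits)]
    counts = Counter()
    for sweep in range(burn_in + sweeps):
        for i in range(n_bits):
            # Feedback: cost of this bit being 0 versus 1, given the other bits.
            state[i] = 0
            e0 = energy(state, target, split)
            state[i] = 1
            e1 = energy(state, target, split)
            bias = 0.5 * beta * (e0 - e1)
            # Biased coin flip: the bit favors the cheaper value but is not forced into it.
            state[i] = 1 if rng.uniform(-1.0, 1.0) < math.tanh(bias) else 0
        if sweep >= burn_in:
            # Only record states after the initial rounds of feedback.
            counts[(decode(state[:split]), decode(state[split:]))] += 1
    return counts
```

Calling `sample_factors(35).most_common(3)` lists the most frequently visited pairs. In this toy setup, the pairs that multiply to 35 dominate the tally, while near misses still appear from time to time, which is why multiple samples are needed before the answer stands out.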

It works (slowly)

To put this all into practice, the researchers constructed an eight p-bit computer and developed a number-factoring algorithm to run on it. Given the algorithm, eight p-bits is enough to handle numbers up to about 950, so your passwords are all safe for now. But it worked. While a typical sample of the device’s state included some wrong answers, over time, the correct answers stood out from the random noise of the device.

But the sampling required to get there is the big issue: it took 15 seconds. Obviously, existing computers can factor these numbers in a tiny fraction of that. Why would anyone bother with this?

The issue is one of scaling. We already know how to make these p-bits in vast quantities, and they can be connected with a simple bit of wiring. Solving a simple problem like this in 15 seconds looks bad in comparison to normal computers. But there are problems this could solve that a classical computer simply couldn’t before suffering a hardware failure. As long as the time needed to sample the solution space doesn’t scale as fast as the complexity of the problem, those problems could be solved in a useful amount of time.

That, of course, is also the promise of both quantum computing and quantum annealing. So the researchers spend some time in their paper explaining the distinctions between their p-bits and these two types of qubit. For one, we know how to make the magnetic tunnel junctions on a scale similar to existing transistors — these are based on a potential form of non-volatile memory. And rather than requiring operating conditions held at near absolute zero, they work at room temperature.

Disconnected

One factor that’s holding back both of these forms of quantum computing is managing the connections among the qubits. Qubits have to interact with each other in order to function as part of a computational unit, and those interactions can lead to forms of interference that can produce errors. Here, the connections are managed through standard circuit board wiring. The factoring algorithm is also very bit-efficient; a number n of p-bits can be used to factor numbers up to 2^(n+2).
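As a quick sanity check on that scaling, taking the bound to be 2^(n+2), the eight p-bit device lands at:

```latex
2^{\,n+2}\Big|_{n=8} = 2^{10} = 1024
```

That is in line with the limit of roughly 950 quoted for the eight p-bit machine, and each additional p-bit doubles the bound.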

Errors also provide another potential advantage to p-bits. Quantum computing schemes have two options for dealing with them: either include extra qubits to allow error correction or simply run the computation multiple times and take the typical results. As we already noted, making and connecting qubits is a challenge; connecting enough to do error correction as well greatly amplifies that challenge. And because of its nature, probabilistic computing already involves sampling multiple results of the calculation.

In fact, the system is robust enough that different p-bits can be put through the feedback process at any time; if they’re temporarily in the wrong state, it will all wash out in the averaging. This means the feedback process can be handled asynchronously, so that aspect of the computation shouldn’t need to scale up much with additional p-bits.

The real question, then, comes from the amount of sampling needed. Here, 15 seconds was easily enough to allow the correct factors to stand out above the related-but-wrong answers that the system also sampled. It’s possible that a larger number of p-bits will mean a significantly larger sampling time. Of course, if the alternative is using a system where providing an answer will take until never, even a larger sampling time might start looking appealing.

Nature, 2019. DOI: 10.1038/s41586-019-1557-9.
