· byMatthew Honnibal and Ines Montani

Version 2.2 of the spaCy Natural Language Processing library is leaner, cleaner and even more user-friendly. In addition to new model packages and features for training, evaluation and serialization, we’ve made lots of bug fixes, improved debugging and error handling, and greatly reduced the size of the library on disk.

While we’re grateful to the whole spaCy community for their patches and support, Explosion has been lucky to welcometwo new team memberswho deserve special credit for the recent rapid improvements: Sofie Van Landeghem and Adriane Boyd have been working on spaCy full-time. This brings thecore teamup tofour developers– so you can look forward to a lot more to come.

New models and data augmentation

spaCy v2.2comes with retrained statistical models, that include bug fixes andimproved performance over lower-cased texts. Like other statistical models, spaCy’s models can be sensitive to differences between the training data and the data you’re working with. One type of difference we’ve had a lot of trouble with is casing and formality: most of the training data we have is text that is fairly well edited, which has meant lower accuracy on texts which have inconsistent casing and punctuation.

To address this, we’ve begun developing a newdata augmentation system. The first feature we’ve introduced in the v2.2 models is a word replacement system that also supports paired punctuation marks, such as quote characters. During training, replacement dictionaries can be provided, with replacements made in a random subset of sentences each epoch. Here’s an example of the type of problem this change can help with. The German NER model is trained on a treebank that uses``as its open-quote symbol. When Wolfgang Seekerdeveloped spaCy’s German support, he used a preprocessing script that replaced some of those quotes with unicode or ASCII quotation marks. However, one-off preprocessing steps like that are easy to lose track of – eventually leading to a bug in the v2.1 German model. It’s much better to make those replacements during training, which is just what the new system allows you to do.

If you’re using thespacy traincommand, the new data augmentation strategy can be enabled with the new- orth-variant-levelparameter. We’ve set it to0.3by default, which means that 30% of the occurrences of some tokens are subject to replacement during training. Additionally, if an input is randomly selected for orthographic replacement, it has a 50% chance of also being forced to lower-case. We’re still experimenting with this policy, but we’re hoping it leads to models that are more robust to case variation. Let us know how you find it! More APIs for data augmentation will be developed in future, especially as we get more evaluation metrics for these strategies into place.

We’re also pleased to introduce pretrained models fortwo additional languages :NorwegianandLithuanian. Accuracy on both of these languages should improve in subsequent releases, as the current models make use of neither pretrained word vectors nor thespacy pretraincommand. The addition of these languages has been made possible by the awesome work of the spaCy community, especiallyTokenMillfor the Lithuanian model, and theUniversity of Oslo Language Technology Groupfor Norwegian. We’ve been adopting a cautious approach to adding new language models, as we want to make sure that once a model is added, we can continue to support it in each subsequent version of spaCy. That means we have to be able to train all of the language models ourselves, because subsequent versions of spaCy won’t necessarily be compatible with the previous suite of models. With steady improvements to our automation systems and newteam membersjoining spaCy, we look forward to addingWhy not more models ?TheUniversal Dependenciescorpora make it reasonably easy to distribute models for a much wider variety of languages. However, most UD-trained models aren’t that useful for practical work. The UD corpora tend to be small, CC BY-NC licensed, and they tend not to provide NER annotations. To avoid breaking backwards compatibility, we’re trying to only roll out new languages once we have models that are a bit more ready for use.

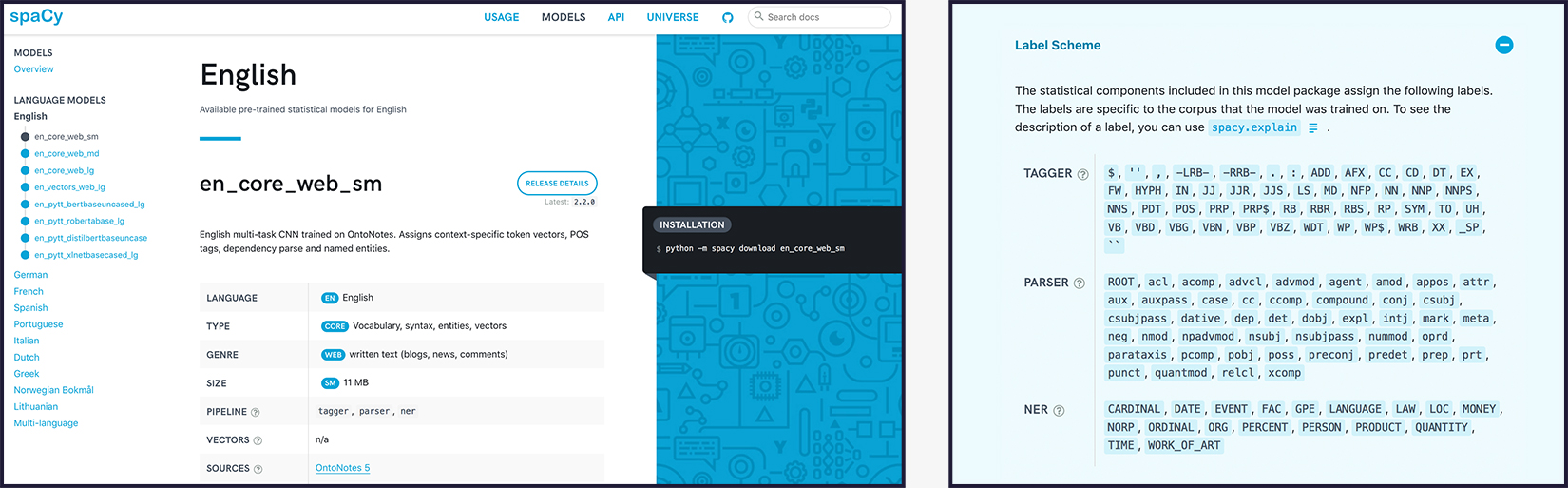

Better Dutch NER with 20 categories

Our friends atNLP Townhave been making some great contributions to spaCy’s Dutch support. For v2.2, they’ve gone even further, andannotated a new datasetthat should make the pretrained DutchNERmodel much more useful. The new dataset provides OntoNotes 5 annotations over theLaSSy corpus. This allows us to replace the semi-automatic Wikipedia NER model with one trained ongold-standard entities of 20 categories. You can see the updated results in our new and improvedmodels directory, that now shows more detail about the different models, including the label scheme. At first glance the new model might look worse, if you only look at the evaluation figures. However, the previous evaluation was conducted on the semi-automatically created Wikipedia data, which makes it much easier for the model to achieve high scores. The accuracy of the model should improve further when we add pretrained word vectors and when we wire in support for thespacy pretraincommandinto our model training pipeline.

New CLI features for training

spaCy v2.2 includes several usability improvements to thetr aining and data development workflow, especially for text categorization. We’ve improved error messages, updated the documentation, and made the evaluation metrics more detailed – for example, the evaluation now provides per-entity-type and per-text-categoryaccuracy statisticsby default. One of the most useful improvements is integrated support for the text categorizer in thespacy traincommand line interface. You can now write commands like the following, just as you would when training the parser, entity recognizer or tagger:

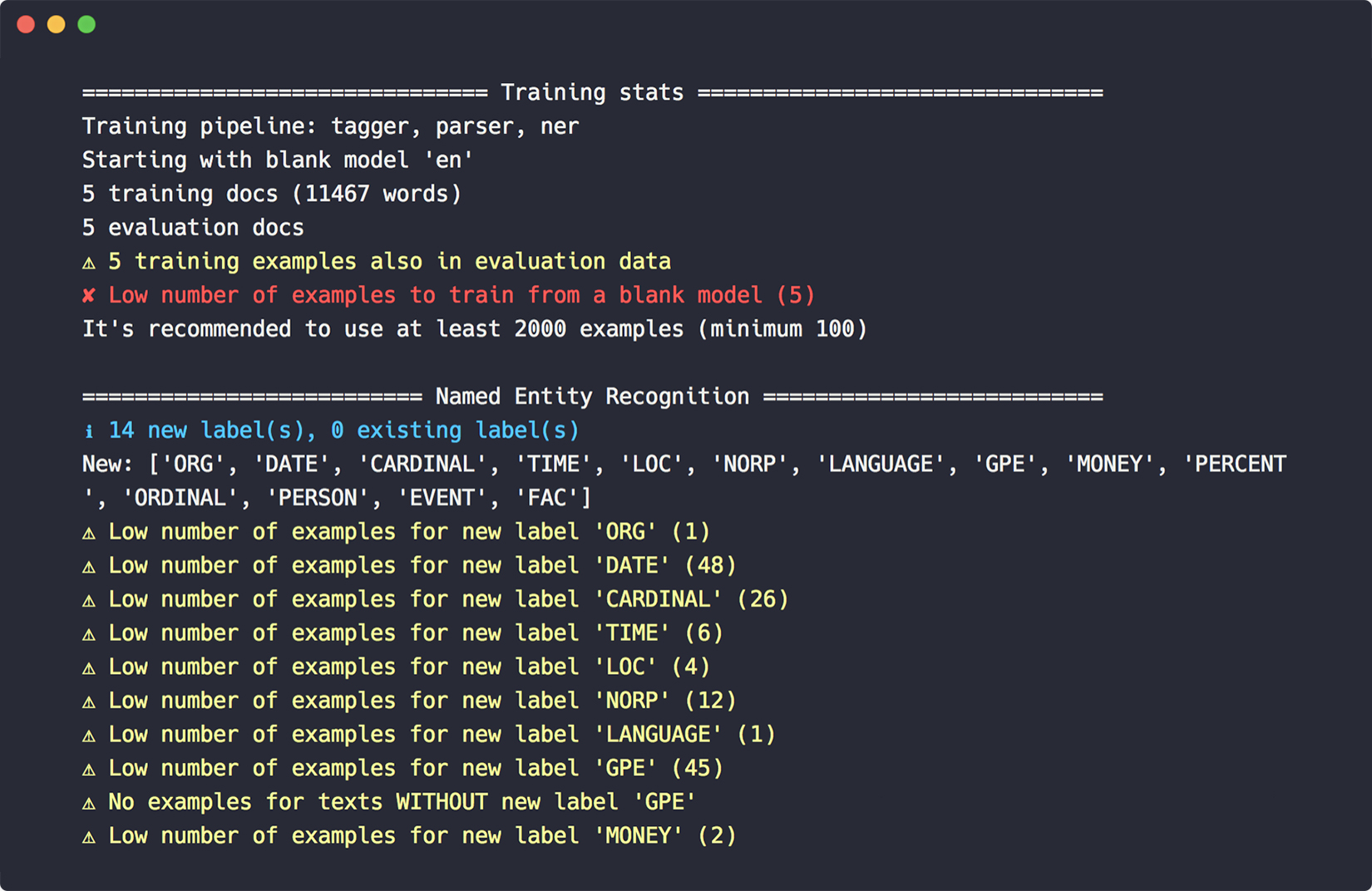

python -m spacy train en / output / train / dev --pipeline textcat --textcat-arch simple_cnn --textcat-multilabelYou can read more about the data format requiredin the API docs. To make training even easier, we’ve also introduced a newdebug-datacommand, tovalidate your training and development data, get useful stats, and find problems like invalid entity annotations, cyclic dependencies, low data labels and more . Checking your data before training should be a huge time-saver, as it’s never fun to hit an error after hours of training.

debug-datacommandSmaller disk foot-print, better language resource handling

As spaCy has supportedmore languages , the disk footprint has crept steadily upwards, especially when support was added for lookup-based lemmatization tables. These tables were stored as Python files, and in some cases became quite large. We’ve switched these lookup tables over to gzipped JSON and moved them out to a separate package,spacy-lookups-data, that can be installed alongside spaCy if needed. Depending on your system, your spaCy installation should now be5 – 10 × smaller.

pip install -U spacy [lookups]When do I need the lookups package?Pretrained modelsalready include their data files, so you only need to install the lookups data if you want to use lemmatization for languages that don’t yet come with a pre-trained model and aren ‘ t powered by third-party libraries, or if you want to create blank models usingspacy.blankand want them to include lemmatization rules and lookup tables.

Under the hood, large language resources are now powered by a consistent(Lookups) ********************** (API) that you can also take advantage of when writing custom com ponents. Custom components often need lookup tables that are available to theDoc,TokenorSpanobjects. The natural place for this is in the sharedVocab– that’s exactly the sort of thing theVocabobject is for. Now custom components can place data there too, using the new lookups API.

DocBin for efficient serialization

Efficient serialization is very important for large-scale text processing. For many use cases, a good approach is to serialize a spaCyDocobject as a numpy array, using theDoc.to_arraymethod. This lets you select the subset of attributes you care about, making serialization very quick. However, this approach does lose some information. Notably, all of the strings are represented as 64 – bit hash values, so you’ll need to make sure that the strings are available in your other process when you go to deserialize theDoc.

The newDocBinclasshelps youefficiently serialize and deserializea collection ofDocobjects, taking care of lots of details for you automatically. The class should be especially helpful if you’re working with a multiprocessing library likeDask. Here’s a basic usage example:

import spacy from spacy.tokens import DocBin doc_bin=DocBin (attrs=["LEMMA", "ENT_IOB", "ENT_TYPE"], store_user_data=True) texts=["Some text", "Lots of texts...", "..."] nlp=spacy.load ("en_core_web_sm") for doc in nlp.pipe (texts): doc_bin.add (doc) bytes_data=docbin.to_bytes () # Deserialize later, e.g. in a new process nlp=spacy.blank ("en") doc_bin=DocBin (). from_bytes (bytes_data) docs=list (doc_bin.get_docs (nlp.vocab))Internally, theDocBinconverts eachDocobject to a numpy array, and maintains the set of strings needed for all of theDocobjects it’s managing. This means the storage will be more efficient per document the more documents you add – because you get to share the strings more efficiently. The serialization format itself is gzipped msgpack, which should make it easy to extend the format in future without breaking backwards compatibility.

10 × faster phrase matching

(spaCy’sPhraseMatcherclass gives you an efficient way to perform (exact-match search (with a potentially) huge number of queries. It was designed for use cases like finding all mentions of entities in Wikipedia, or all drug or protein names from a large terminology list. The algorithm thePhraseMatcherused was a bit quirky: it exploited the fact that spaCy’sTokenobjects point toLexemestructs that are shared across all instances. Words were marked as possibly beginning, within, or ending at least one query, and then the Matcher object was used to search over these abstract tags, with a final step filtering out the potential mismatches.

The key benefit of the previousPhraseMatcheralgorithm is how well it scales to large query sets. However, it wasn’t necessarily that fast when fewer queries were used – making its performance characteristics a bit unintuitive – especially since the algorithm is non-standard, and relies on spaCy implementation details. Finally, its reliance on these details has introduced a number of maintainence problems as the library has evolved, leading to some subtle bugs that caused some queries to fail to match. To fix these problems, v2.2 replaces thePhraseMatcherwith a more straight-forward trie-based algorithm. Because the search isperformed over tokens instead of characters, matching is very fast – even before the implementation was optimized using Cython data structures. Here’s a quick benchmark searching over 10, 000 Wikipedia articles.

| # queries | # matches | v2.1.8 (seconds) | v2.2.0 (seconds) |

|---|---|---|---|

| 10 | |||

| 100 | 795 | 0. 028 | |

| 100 | 795 | ||

| 11, 376 | 0. 512 | 0. 043 | |

| 10, 000 | 105, 688 | 0. 114 |

When few queries are used, the new implementation is almost20 × faster– and it’s still almost 5 x faster when 10 , 000 queries are used. The runtime of the new implementation roughly doubles for every order of magnitude increase in the number of queries, suggesting that the runtimes will be about even at around 1 million queries. However, the previous algorithm’s runtime was mostly sensitive to the number of matches (both full and partial), rather than the number of query phrases – so it really depends on how many matches are being found. You might have some query sets that produce a high volume of partial matches, due to queries that begin with common words such as “the”. The new implementation should perform much more consistently, and we expect it to be faster in almost every situation. If you do have a use-case where the previous implementation was performing better, please let us know.

(New video series) ************** ()

In case you missed it, you might also be interested in the new beginner-orientedvideo tutorial serieswe’re producing, in collaboration with data science instructorVincent Warmerdam. Vincent is building a a system to automatically detect programming languages in large volumes of text. You can follow his process from the first idea to a prototype all the way to data collection andtraining a statistical named entity recogntion modelfrom scratch.

We’re excited about this series because we’re trying to avoid a common problem with tutorials. Most tutorials only ever show you the “happy path”, of everything working out exactly as the authors intended it. The problem’s much bigger and more fundamental than technology: there’s a reason that“draw the rest of the owl “memeresonates soWidely. The best way to avoid this problem is to turn the pencil over to someone else, so you can really see the process. Two episodes have already been released, and there’s a lot more to come!

GIPHY App Key not set. Please check settings