Bokehlicious Selfies

I signed up for the excellent fastai MOOC recently, and one of the project ideas I had was adding bokeh to selfies using deep learning. Most phones have a not-so-great selfie (front-facing) camera, so the idea has some merit.

Google Photos does something like this, and it’s quite magical when it works. So I wanted to experiment with a simple pipeline that could add bokeh to a selfie that did not have any.

Breaking down the problem

One idea I had was to use image segmentation to build a segmentation mask around the person in the image. For this I used the excellent torchvision.models.detection.maskrcnn_resnet50_fpn pretrained model. This model has been trained on the COCO dataset, so it works well out of the box for this use case.

Once we have a segmentation mask of the person in the image, we can use it to split the image into a foreground (the subject) and a background. I can then use image convolution to create a bokeh effect on the background image and merge it with the subject to give it a nice pop.

One key thing to remember is that the merged image is only as good as the segmentation mask, but given that I am restricting the input to portrait selfies, this works most of the time.

Let's write some code

The Bokeh Effect

I read this incredible article on how to simulate a bokeh effect. I then adapted the idea and wrote a quick Python implementation using some helpers from OpenCV.

Let's start with our imports.

    import cv2
    import math
    import numpy as np
    import matplotlib.pyplot as plt

    plt.rcParams["figure.figsize"] = (10, 10)  # placeholder size; the original values were lost
    np.set_printoptions(precision=3)

We need to build a convolution kernel that can produce a bokeh effect. The idea here is to take a Gaussian kernel with a large standard deviation and multiply it with a simple binary mask to emphasize the effect.

    # Binary mask that gives the blur its triangular shape
    triangle = np.array([
        [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0],
        [0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0],
        [0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0],
        [0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0],
        [0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0],
        [0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0],
        [0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0],
        [0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0],
        [0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0],
        [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1],
    ], dtype='float')

    mask = triangle
    kernel = cv2.getGaussianKernel(11, 5.)      # 11x1 column, sized to match the mask
    kernel = kernel * kernel.transpose() * mask  # Is the 2D filter
    kernel = kernel / np.sum(kernel)             # Normalize so the weights sum to 1
    print(kernel)

This produces something like:

    [0. 0. 0. 0. 0. 0.016 0. 0. 0. 0. 0. ]
    [0. 0. 0. 0. 0. 0.016 0. 0. 0. 0. 0. ]
    [0. 0. 0. 0. 0.018 0.018 0.018 0. 0. 0. 0. ]
    [0. 0. 0. 0. 0.02 0.02 0.02 0. 0. 0. 0. ]
    [0. 0. 0. 0.02 0.021 0.021 0.021 0.02 0. 0. 0. ]
    [0. 0. 0. 0.02 0.021 0.022 0.021 0.02 0. 0. 0. ]
    [0. 0. 0.017 0.019 0.02 0.02 0.02 0.019 0.017 0. 0. ]
    [0. 0. 0.017 0.019 0.02 0.02 0.02 0.019 0.017 0. 0. ]
    [0. 0. 0.017 0.019 0.02 0.02 0.02 0.019 0.017 0. 0. ]
    [0. 0. 0.017 0.019 0.02 0.02 0.02 0.019 0.017 0. 0. ]
    [0. 0.012 0.013 0.015 0.016 0.016 0.016 0.015 0.013 0.012 0. ]
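As a sanity check on the kernel, here is a small NumPy-only sketch. It assumes that, for a positive sigma, `cv2.getGaussianKernel` follows the usual Gaussian formula normalised to sum to 1; the helper names `gaussian_1d` and `shaped_kernel` are made up for illustration, and a 5x5 triangle stands in for the 11x11 one. It shows that after the final division the weights sum to 1, so filtering a flat grey patch leaves its brightness unchanged.

```python
import numpy as np

def gaussian_1d(ksize, sigma):
    """Column vector of Gaussian weights, normalised to sum to 1."""
    x = np.arange(ksize) - (ksize - 1) / 2.0
    g = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return (g / g.sum()).reshape(-1, 1)

def shaped_kernel(mask, sigma):
    """Outer-product Gaussian cut to a square binary mask, then renormalised."""
    g = gaussian_1d(mask.shape[0], sigma)
    kernel = g * g.T * mask
    return kernel / kernel.sum()

# A small 5x5 triangle stands in for the 11x11 one used in the article.
small_triangle = np.array([
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [1, 1, 1, 1, 1],
], dtype=float)

small_kernel = shaped_kernel(small_triangle, sigma=5.0)
kernel_total = small_kernel.sum()

# A weighted average of a flat patch returns the same brightness.
flat_patch = np.full((5, 5), 0.5)
filtered_value = float((flat_patch * small_kernel).sum())
```

Without the renormalisation, cutting the Gaussian down to the triangle would remove some of the weight and darken the blurred image.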

Let's try the kernel. First, let's load the input image:

    # Credit for the image: https://fixthephoto.com/self-portrait-ideas.html
    image = cv2.imread('images/selfie-1.jpg')
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)  # OpenCV loads images as BGR
    plt.imshow(image)

Now, let's define the actual bokeh function that applies the kernel.

    def bokeh(image):
        r, g, b = cv2.split(image)

        # Scale each channel to [0, 1]
        r = r / 255.
        g = g / 255.
        b = b / 255.

        # Brighten the highlights so they bloom into the kernel shape
        r = np.where(r > 0.9, r * 2, r)
        g = np.where(g > 0.9, g * 2, g)
        b = np.where(b > 0.9, b * 2, b)

        # Convolve each channel with the shaped kernel
        fr = cv2.filter2D(r, -1, kernel)
        fg = cv2.filter2D(g, -1, kernel)
        fb = cv2.filter2D(b, -1, kernel)

        # Cap the result at 1
        fr = np.where(fr > 1., 1., fr)
        fg = np.where(fg > 1., 1., fg)
        fb = np.where(fb > 1., 1., fb)

        result = cv2.merge((fr, fg, fb))
        return result

    result = bokeh(image)
    plt.imshow(result)

We now have a method that can generate a bokeh effect for a given image.
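The brightening step inside `bokeh` is what lets light sources bloom into kernel-shaped highlights: any value above 0.9 is doubled before blurring, and the result is capped at 1 afterwards. The sketch below isolates that step on a tiny array and uses `np.clip` for the cap, which should be equivalent to the `np.where` calls; `boost_highlights` is a hypothetical helper name.

```python
import numpy as np

def boost_highlights(channel, threshold=0.9, gain=2.0):
    """Multiply values above `threshold` by `gain`; leave the rest alone."""
    return np.where(channel > threshold, channel * gain, channel)

channel = np.array([0.10, 0.50, 0.95, 1.00])
boosted = boost_highlights(channel)

# In the full function the blur happens at this point.
# Afterwards, anything above 1 is clipped back into range.
capped = np.clip(boosted, 0.0, 1.0)
```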

Image Segmentation We now need to use the

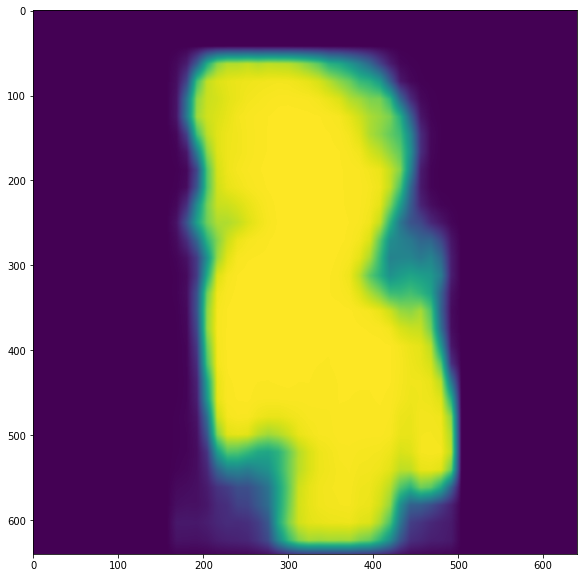

torchvision.models.detection.maskrcnn_resnet [0. 0.012 0.013 0.015 0.016 0.016 0.016 0.015 0.013 0.012 0. ] (_ fpn) (pretrained model to segment the above image to split into foreground [0. 0. 0.017 0.019 0.02 0.02 0.02 0.019 0.017 0. 0. ] & background Let's do that. import torch import torchvision model=torchvision.models.detection.maskrcnn_resnet _ fpn (pretrained=True) model.eval () image=cv2.imread ('images / selfie-1.jpg') image=cv2.cvtColor (original, cv2.COLOR_BGR2RGB) # OpenCV uses BGR by default image=image / . # Normalize image channels_first=np.moveaxis (image, 2, 0) # Channels first # The pre-trained model expects a float type channels_first=torch.from_numpy (channels_first) .float () prediction=model ([0. 0.012 0.013 0.015 0.016 0.016 0.016 0.015 0.013 0.012 0. ]) [channels_first] scores=prediction [channels_first]. detach (). numpy () masks=prediction . detach (). numpy () mask=masks [channels_first] [channels_first] plt.imshow (masks ['scores'] [channels_first]) () This produces a segmentation-mask which looks like:
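The segmentation step takes the first mask the model returns. Mask R-CNN can return several detections, so a slightly more defensive variant might keep only detections labelled as a person and above a score threshold. The sketch below works on plain NumPy arrays shaped like the model's `labels`, `scores` and `masks` outputs; `pick_person_mask` and both 0.5 thresholds are assumptions, and it relies on label 1 meaning "person" in torchvision's COCO-trained detection models.

```python
import numpy as np

PERSON_LABEL = 1  # 'person' in torchvision's COCO category list

def pick_person_mask(labels, scores, masks, min_score=0.5, binarize_at=0.5):
    """Return a binary (H, W) mask for the best-scoring person, or None."""
    keep = (labels == PERSON_LABEL) & (scores >= min_score)
    if not keep.any():
        return None
    candidates = np.flatnonzero(keep)
    best = candidates[np.argmax(scores[candidates])]
    soft_mask = masks[best, 0]  # masks are shaped (N, 1, H, W)
    return (soft_mask >= binarize_at).astype(float)

# Fake outputs for two detections on a 2x2 image (illustrative values only).
labels = np.array([3, 1])
scores = np.array([0.99, 0.80])
masks = np.array([
    [[[0.9, 0.9], [0.9, 0.9]]],
    [[[0.7, 0.2], [0.6, 0.1]]],
])
person_mask = pick_person_mask(labels, scores, masks)
no_person = pick_person_mask(labels[:1], scores[:1], masks[:1])
```

Binarizing gives a hard edge around the subject. Keeping the soft mask, as the article does, gives a feathered edge instead.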

Splitting & Merging

Now that we have a segmentation mask, we can split the image into foreground and background like so:

    inverted = np.abs(1. - mask)

    r, g, b = cv2.split(image)

    # Subject: keep only the pixels covered by the mask
    mr = r * mask
    mg = g * mask
    mb = b * mask
    subject = cv2.merge((mr, mg, mb))

    # Background: keep everything outside the mask
    ir = r * inverted
    ig = g * inverted
    ib = b * inverted
    background = cv2.merge((ir, ig, ib))

    subject = np.asarray(subject * 255., dtype='uint8')
    plt.imshow(subject)
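The per-channel split and merge can also be written with NumPy broadcasting, which may be easier to read. This is a sketch on a tiny synthetic image rather than the selfie; `split_by_mask` is a hypothetical helper, and it assumes `image` is a float array of shape (H, W, 3) and `mask` is (H, W) with values in [0, 1].

```python
import numpy as np

def split_by_mask(image, mask):
    """Split an (H, W, 3) float image into subject and background layers."""
    alpha = mask[..., np.newaxis]  # (H, W, 1) broadcasts over the channels
    subject = image * alpha
    background = image * (1.0 - alpha)
    return subject, background

image = np.full((2, 2, 3), 0.8)
mask = np.array([[1.0, 0.0],
                 [0.5, 0.0]])
subject, background = split_by_mask(image, mask)
```

Because the two layers use complementary weights, adding them back together reproduces the original image, which is what makes the later merge step work.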

Let's now apply the bokeh effect on the background image and then merge both images.

    # bokeh() expects 8-bit values, so scale the background back to [0, 255]
    background_bokeh = bokeh(np.asarray(background * 255, dtype='uint8'))
    background_bokeh = np.asarray(background_bokeh * 255, dtype='uint8')

    combined = cv2.addWeighted(subject, 1., background_bokeh, 1., 0)
    plt.imshow(combined)
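One reason to merge with `cv2.addWeighted` rather than a plain `+` is that OpenCV saturates 8-bit results at 255, whereas adding two `uint8` NumPy arrays wraps around. The NumPy-only sketch below illustrates the difference; `saturating_add` is a hypothetical helper that mimics the saturating behaviour for equal weights of 1.

```python
import numpy as np

def saturating_add(a, b):
    """Add two uint8 arrays, clamping at 255 instead of wrapping around."""
    total = a.astype(np.int32) + b.astype(np.int32)
    return np.clip(total, 0, 255).astype(np.uint8)

subject_px = np.array([200, 0, 120], dtype=np.uint8)
background_px = np.array([100, 90, 0], dtype=np.uint8)

wrapped = subject_px + background_px  # uint8 arithmetic wraps modulo 256
clamped = saturating_add(subject_px, background_px)
```

With a soft mask, pixels near the subject's edge carry a contribution from both layers, so this is where wrap-around would show up as dark speckles.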

Conclusion

Deep learning is magical for applications like these. I hope you enjoyed reading the article.
