Fast HSV to RGB Conversion

When small CPUs need to do work

Index

- Introduction

- Color models

- HSV calculation background

- HSV calculation 256 / 256 / 256

- Allowing larger multiplication (8x) , (x) , (x) )

- Code implementations

- Conclusion

- Appendix: Floating point calculation

Introduction

Many people use small small micro controllers. These small machines are fantastic for doing a specific job. We also see a lot of blinkenlights, especially with the Arduinos and lots of enthusiastic people hacking their merry way. Most of us have become accustomed with RGB LEDs and a large group has had experience with the lovely simple WS 2812 LED all-in-one chip.

An often encountered a problem with color LEDs is the use of RGB color control. The RGB color-space is a non-intuitive way of controlling colors and is often difficult to handle without a significant amount of code and non-linear thinking. There are many ways to think about colors and one abstraction is using a different color-space. Handling colors in color-spaces can range from simple to rather difficult. Just do a search in the web to see how much is written about colors and color-spaces.

Using a different color-space than RGB makes codingfunctionalitymuch easier, but it comes at the expense of a more or less complex calculation to convert the color-space used in coding to the LED controlling RGB color-space. Therein also lies the problem, small micro controllers are unable to perform complex calculations without significant resources, which usually means increased calculation time.

Creating fast and small code is still relevant today, even with the availability of 32 – bit commodity MCUs and CPUs like the ARM based chips from all over. Fast code has an advantage; you can do more with less. That is always a nice. Big chips come at a price, especially when you need hardware floating point. You just have to think smart while coding when it is preferred to choose Cheaper chips.

It is advantageous to read the Wikipedia entry onHSV and HSL before proceeding to the calculation section. It is assumed that you are reasonably familiar with the C language and types, binary integer calculations and the associated overflow behavior in CPUs and compiler generated code.

If you are not interested in the backgrounds and just want some code, then you maygo there immediately.

Color models

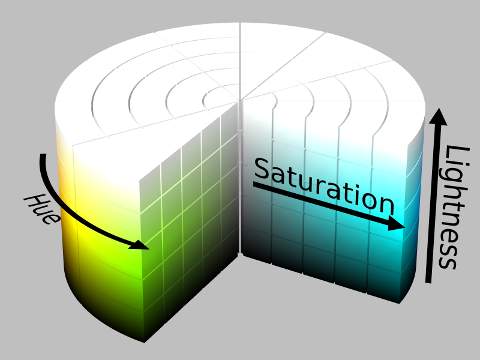

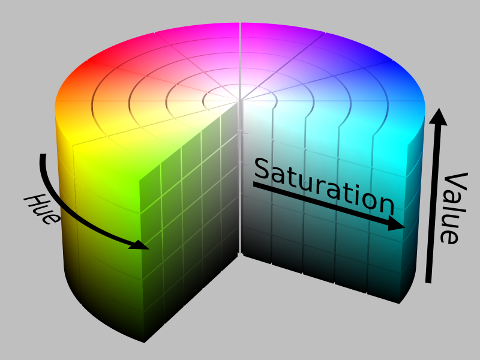

LED light is an additive process to create colors. Two simple additive color-models are (seefigure 1):

- HSL – Hue Saturation Lightness

- HSV – Hue Saturation Value

|

|

Using a different color-space than RGB makes handling color gradients much more easy and more intuitive. However, the calculation can be quite hard for a small CPU. Conversion normally use floating point, which is something small CPUs do not have. Emulation is possible, but take a disproportionate amount of time. The calculation can be hard, even when using integers. The very small CPUs cannot do division at the instruction level and most have no hardware multiplication either. Some smart code is required.

The HSV model is the most simple and easiest model to work with. The disadvantage of HSV in comparison to HSL is the intensity (the lightness) when mapping for example photographic images. However, the simplicity of HSV makes up for most if not all deficits and photographic accuracy is rarely an issue in blinkenlights projects. Using HSV in your next blinkenlights project makes that rainbow on the strip a piece of colorful cake.

A mapping from HSV to RGB on 8-bit micro controllers should preferably use the smallest types available (8-bit). Using small and native sizes will most likely keep the generated code small. At the same time, a reasonably good accuracy must be achieved with these limited resources and calculations should be as fast as possible. That said, the algorithm should not exclude larger CPUs if not necessary to do so. Portability if a great feature that must be achieved is

HSV calculcation background

The calculation for HSV is very straight forward (see also HSL and HSV). The calculation can be illustrated as shown infigure 2. There are only four calculations in the HSV to RGB conversion and only three are required for a particular conversion at any time ({1, 2 and 3} or {1, 2, and 4}). The chroma (maximum distance between any RGB values) is (chroma=V – V cdot (1 – S)=V cdot S ). All equations necessary for the conversion are:

( begin {align *} level_ {bottom} &=V cdot (1.0 – S) & (1) \ level_ {top} &=V & (2) \ slope_ {down} &=V cdot (1.0 – S cdot H_ Delta) & (3) \ slope_ {up} &=V cdot (1.0 – S cdot (1.0 – H_ Delta)) & (4) end {align *} )

The magnitude of the values for HSV are:

- (0.0 le H lt 360 .0 ) and (H_ Delta={{H mod 60 0} over {60. 0}} ) ( Rightarrow 0.0 le H_ Delta lt 1.0 )

- (0.0 le S le 1.0 )

- (0.0 le V le 1.0 )

The RGB channels are assigned values from the above four equation depending on the sextant of the hue value with (sextant : equiv : int (H / 60 .0) ). The scaling of the resulting values is (0.0 le {R, G, B } le 1.0 ). It should be clear that the conversion calculation uses floating point when you look at these equations and numbers.

The usual scaling for integer level RGB values are (0 le {R, G, B } le 255 ), which is exactly an 8-bit unsigned integer value. It is a logical step to scale both (S ) and (V ) equally, so that may be encapsulated by an 8-bit unsigned integer with (0.0 : hat {=} : 0 ) and (1.0 : hat {=} : 255 ) such that (0 le S le 255 ) and (0 le V le 255 ).

Doing integer calculation will always result in loss of accuracy for a generic non-integer calculation. This cannot be prevented, unless the numbers are very specific, which they are not. Therefore, it is expected to have some /- 1 error in the result. The trick is to balance the error generation and propagation such that it does not matter too much for the result, and at the same time to reduce the calculation’s complexity for fast execution.

The preferred variable types for HSV can be determined on the basis of the equations and the knowledge of the target RGB values. From equation 2 it follows that there is a one-to-one mapping, and combined with byte-sized RGB means that both S and V should be byte-sized. The hue parameter spans six sextants. Mapping hue to a byte would reduce the possible RGB values because many HSV combinations map to the same converted color. The slope of each sextant covers the entire range of the RGB value, implying to use a byte for each sextant, resulting in a 16 – bit type for hue.

Calculation complexity

The conversion between color spaces on small 8-bit CPUs should preferably be done using 8-bit math. The native size is the fastest way for those CPUs to do calculations. The goal is to devise an algorithm that is both as fast and as accurate as possible within the constraints of a small 8-bit CPU.

Using 8-bit math (and a little 16 – bit math) will result is small errors. The first source of error consist of rounding and truncation errors due to finite sizes. The second source can be found in calculation shortcuts. The algorithm must be optimized for small CPUs and that means that some calculations are more difficult than others. The difficult ones can often be replaced if some error is accepted. The trick is to reduce the error to a minimal level.

HSV calculation 256 / 256 / 256

Mapping bottom level (equation 1)

Equation 1 with scaling can be written as follows:

(level_ {bottom}={255 cdot Big [ {V over 255} cdot Big( 1.0 – {S over 255} Big) Big]}={{V cdot (255 – S)} over {255}} )

The equation can be partially rewritten because (255 – S= overline {S} ) which is the one’s complement of (S ) in 8-bit integer calculation. The multiplication is ( text {8-bit} times text {8-bit}= text {16 – bit} ), with the complication that the maximum values are 255 and the result reaches only a maximum of 0xfe 01. The correct scaling factor in the calculation is (1 / 255 ), but that requires a rather awkward division. A much better division would use a power of two and result in a division by 256: (level_ {bottom}={{V cdot (255 – S)} over {256}} ). A division by 256 can be implemented as a simple shift operation. Using 256 in the division also has the advantage of being a multiple of 8 bits, which in turn is a complete byte. This enables us to ignore the LSByte of the multiplication result completely and just go with the MSByte. However, the result isnot exactand only reaches a level of (255 / 256 ) from the target.

A compensation for the wrong division is to lift the numerator with a (1 / 256 ) part of the original calculation. The wrong division introduces an error factor of (255 / 256 approx 0. 9961 ). The effective result with compensation becomes (255 / 256 255 / (256 cdot 256) approx 0. 9999955 ). A final correction is to lift the rounding error of the division by adding 1 to the nominator. The result has no residual error and maps perfectly (see also appendix aboutfloating point calculation).

(level_ {bottom}={{V cdot ( (- S) {{V cdot) 255 – S)} over {256}} 1} over {256}} )

The equation is easily implemented because the correction is self-similar. The advantage of a self-similar correction is the ability to use an intermediate calculation result.

Equation 1 can be written in C as:

uint8_tv;uint8_ts; (uint) _tnumerator;uint8_tlvl_bot; numerator=v * (uint8_t) (~ s); numerator =numerator>>8; numerator =1; lvl_bot=numerator>>8;

The order of execution of the 1 corrective addition and the self-similar correction turns out to be insignificant. Normally it is important to perform these types of correction in the right order, but there is no difference in this case. The order of the 1 addition is only significant if a) a carry occurs between the two bytes of the 16 – bit multiplication result, and b) this simultaneously results in a secondary byte-level carry which would otherwise not occur. However, this is not the case because the addition that would cause the carry will do so anyway, whether it is done as first or secondary carry. There is code-wise no performance advantage in executing the 1 addition first or second.

Monochromatic values

Monochromatic values in the HSV cylinder are those where the saturation is zero. This is a special case, where a perfect mapping can be achieved by bypassing the calculation and assigning the RGB values to be equal to the V parameter. Even though equation 1 maps perfectly, the other equations might not. Any set of HSV values where (S=0 ) are trivially detected and can simply be handled separately.

(Mapping top level) 2)

Equation 2 is simply a one-to-one mapping of the (V ) parameter scaled at 8-bit unsigned integer level. Equation 2 can be written in C as:

uint8_tv;uint8_tlvl_top; lvl_top=v;

There is obviously no error in the resulting mapping of values for the top-level.

Mapping slopes (equation 3 and 4)

The slope equations are the only ones that have the hue as a parameter. The hue is the angle on the cylinder, which is divided into 6 sextants. The normalization of the angle ensures (0.0 le H _ { Delta} lt 1.0 ). Please note that the normalized angle never reaches one. Parameter (V ) and (S ) are already mapped to scale to 255. The scale of (H_ Delta ) is done in a similar way as (S ) and (V ), with the hue sextant mapping to fit into an 8-bit unsigned integer value, using (0 le H _ { Delta} lt 256 ). The mapping implies that 1.0 is mapped to 256 Because (H_ Delta ) never reaches one and therefore always fits in an 8-bit unsigned integer:

( begin {align} slope_ {down} &=255 cdot Big [ {V over 255} cdot Big(1.0 – {S over 255} cdot {H_{Delta} over 256}Big) Big] ={{V cdot Big (255 – {{ S cdot H _ { Delta}} over {256} } Big)} over {255}} \ & \ slope_ {up} &=255 cdot Big [ {V over 255} cdot Big(1.0 – {S over 255} cdot {{256 – H_{Delta}} over 256}Big) Big] ={{V cdot Big (255 – {{ S cdot (256 – H _ { Delta})} over {256}} Big)} over {255}} end {align} )

The primary problem in these equations is the larger error when the division is changed to (1 / 256 ) instead of (1 / 255 ). This is because there are two multiplications and divisions in sequence, which cause a compounded calculation error. The resulting error would be in the /- 2 range and not in the /- 1 range as preferred if unchanged.

Rescaling of any of the parameters (V ), (S ) or (H _ { Delta} ) is not really an option because scaling to other values than 0 … 255 would imply that an 8-bit type cannot hold the magnitude of the parameter. Therefore, a similar type of compensation as in the bottom level equation must be employed to balance the equations.

The first compensation is the same as in equation 1, where a self-similar correction is employed to compensate for the (255 / 256 ) factor. The second correction targets the compounded multiplication and lifts the numerator by a fraction of (V / 2 ). This second compensation balances the rounding error to be distributed evenly between under- and over-estimation by /- 1:

( begin {align} slope_ {down} &={{V cdot Big (255 – {{S cdot H _ { Delta} {{S cdot H _ { Delta}} over {256}}} over {256}} Big) {V over 2}} over {256}} \ & \ slope_ {up} &={{V cdot Big (255 – {{S cdot (256 – H _ { Delta}) {{S cdot) 256 – H_ { Delta})} over {256}}} over {256}} Big) {V over 2}} over {256}} end {align} )

The computation (S cdot 256 – H _ { Delta }) ) can be shortcut to prevent an expensive multiplication. The problem is appears when (H _ { Delta}=0 ), where the subtraction results in a 9-bit value (0x 100). All other subtraction results are 8-bit in size. However, the case of (H _ { Delta}=0 ) is trivially detected and a 16 – bit multiplication can be prevented (S ⋅ 256=S

As before, the calculation (255 – X ) is replaced with the one’s complement. The calculation (256 – X ) is replaced with the two’s complement equivalent using 8-bit calculation, resulting in (- X ). Finally, the division by 256 is done by shifting. The resulting code is then:

(uint8_t) v;uint8_ts; (uint) _th; (uint) _tnumerator;uint8_tslope_dn;uint8_tslope_up; numerator=s * h; numerator =numerator>>8; numerator=v * (uint8_t) (~ (uint8_t) (numerator>>8)); numerator =v>>1; slope_dn=numerator>>8; numerator=! h? (( (uint) _t) suint8_t) (- h)); numerator =numerator>>8; numerator=v * (uint8_t) (~ (uint8_t) (numerator>>8)); numerator =v>>1; slope_up=numerator>>8;

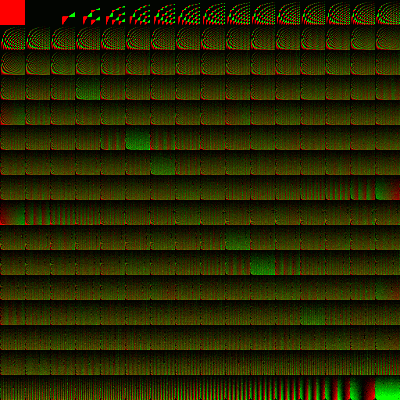

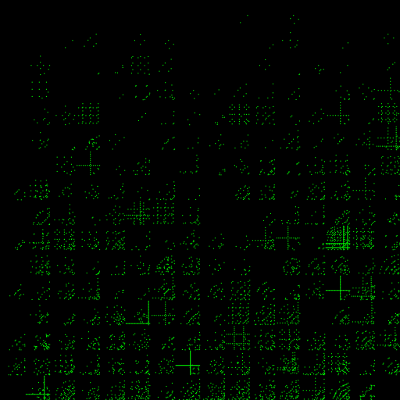

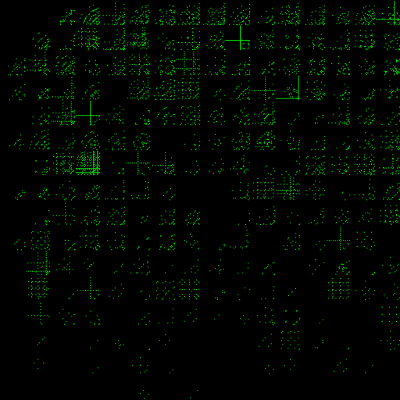

An error analysis of the slopes reveals the following maps:

|

|

All values have a maximum error of /- 1. The error of the slope down image is 6. 00% at the -1 level and 6. 16% at the 1 level. The error of the slope up image is 6. 36% at -1 and 6. 14% at 1. The map reveals that high value (V) will have the highest error concentrations. These errors are primarily in the more saturated color values and are usually in the bright region of intensities. Therefore, it should not result in any significant visual artifacts.

The up- and down-slopes differ and seem to be moved /- 1 for the (H _ { Delta} ) parameter. The reason for this behavior is that the slopes are calculated from each end ( (H _ { Delta} | _ {0 rightarrow 1} ) versus (H _ { Delta} | _ {1 rightarrow 0} )). The resulting shift in calculation causes (H _ { Delta} ) to vary between 0 … 255 for the down-slope and 256 … 1 for the up-slope, which moves the equations slightly with respect to each other (a permanent fix is to use thecalculation with larger resolution).

The artifact for (H _ { Delta}=0 ) in the down-slope, which is consistently at -1, can be compensated if necessary. However, fixing it requires an awkward conditional in the code that makes it run slower. The reason for the -1 error can be found in the fact that the compensation term (S cdot H _ { Delta} / 256 ) is zero for specifically this condition and the correct secondary compensation would then be (V ) instead of (V / 2 ). Therefore, the output is not compensated for this eventuality.

Hue sextants

The scaling of the hue to (6 cdot 256 ) allows for 8-bit selection of the sextant. Each 60 degree sextant is entirely located in the LSByte, whereas the sextant selection is entirely located in the MSByte. The RGB values are assigned from the calculations in a specific order determined by the sextant (see (figure 2).

uint8_tsextant=h>>8;uint8_th_fraction=h & 0xff; (if) (sextantelse (if) (sextant==1) { }else{ } }else{ (if) (sextant==4) { }else(if) (sextant==5) { }else{ } }

The calculation’s hue value is based onh_fraction, which is the LSByte, and all comparisons are based in the MSByte.

Another advantage of the (6 cdot 256 ) hue scaling model is that the slope selection is rather easy. The least significant bit of the sextant indicates directly which slope calculation is relevant. All even sextants are slope-up and all odd sextants are slope-down:

uint8_tslope; (if) (sextant & 1) { slope=... slope_down ... }else{ slope=... slope_up ... }

A different construct is also possible. It is possible to bypass sextant comparisons and associated assignments and only use one fixed set of assignments. The sextant can be used to mangle the RGB return values. If the function uses pointers, then the sextant bits indicate how to exchange RGB pointers. The assignments are stable, but are mapped to the appropriate target color. The following table shows how up / down slopes (u, d) top level (v) and bottom level (c) are distributed and exchanged on the basis of the sextant bits:

| sextant | result | |||||

|---|---|---|---|---|---|---|

| 0 | 0 0 0 | vuc | uvc (rev. cond.) | uvc | ||

| 1 | DVC [012] | DVC | ||||

| 0 1 0 | cvu | UVC | ||||

| 3 | 0 1 1 | cdv | VDC | DVC | DVC | |

| 4 | ucv | uvc | ||||

| (5) | 1 0 1 | VCD | VDC | DVC | DVC | |

Pointer swapping is done not at all (sextant 1), once (sextants 0, 2, 4) or twice (sextants 3, 5). Combining this idea with the previous code-snippet and completing an outline for the function could look like:

void hsv2rgb ( (uint) _th,uint8_t (s,uint8_tV,uint8_t* r,uint8_t* g,uint8_t* b) { (if) (! s) { * r=* g=* b=v;return; }uint8_tsextant=h>>8; (if) (sextant>5) { sextant=5; } (if) (sextant & 2) { swapptr (r, b); } (if) (sextant & 4) { swapptr (g, b); } (if) (! (sextant & 6) { (if) (! (sextant & 1)) { swapptr (r, g); } }else{ (if) (sextant & 1) { swapptr (r, g); } } * g=v; * b=... lvl_bot ...;uint8_th_fraction=h & 0xff; (if) (! (sextant & 1)) { * r=... slope_up ...; }else{ * r=... slope_down ...; } }

An alternative to pointer swapping is assigning local variables to the calculated values and then swap the values accordingly. The advantage of such implementation is that no pointers need to be passed and a 32 – bit return-value could encapsulate the calculated RGB values. A reduced number of arguments is especially advantageous to reduce the call-setup time. A possible drawback is the extent for which intermediate values must be held in registers. Anyhow, the caller must still handle the returned value (s) and act accordingly. It is very implementation specific which strategy ultimately will be better.

(Allowing larger multiplication) 8x 16 , 16 x 16 , (x) )If you have a bigger CPU, like f.ex. an ARM, then the calculations are allowed to use larger multiplications and types larger than 8-bit. In fact, all CPUs that support 16 -bit or larger operations in the instruction set asatomicinstructions will benefit greatly. The bottom level calculations are already at its best mapping. However, the slope calculations suffer from rounding errors that are primarily caused by reduction of accuracy in the intermediate results due to the sizes of the used types.

It is not required to have a bigger CPU. Also small CPUs can do math on larger types, but it takes a bit longer. There is a trade-off between accuracy and speed and that choice has to be made for the particular use of the routine. Practical consideration is important for which choice is to be made. It should be noted that it turns out that some of the seemingly complex multiplications can be implemented much more simple than expected on 8-bit CPUs with a minimum of overhead. It still takes more code and time, but not as much as you would expect.

The setup again uses (0 le H _ { Delta} lt 256 ), (0 le S le 255 ) and (0 le V le 255 ). The equations are altered in a fashion to obtain balance in the rounding error as good as possible. That means that the order of the calculation is controlled more tightly to match the proper slope in both directions. The new slope calculations do compounded scaling divisions in one operation, thereby reducing the intermediate rounding error and keeping intermediate fractional results intact. This allows for a better result by effectively using a fixed-point calculation with higher resolution. Rewriting the equations for larger multiplication by eliminating the intermediate divisions:

( begin {align} slope_ {down} &={{V cdot Big (255 – {{S cdot H _ { Delta}} over {256}} Big)} over {255}} ={{V cdot Big (255 cdot 256) – {S cdot H _ { Delta}} Big)} over {255 cdot 256}} \ & \ slope_ {up} &={{V cdot Big (255 – {{S cdot (256 – H _ { Delta})} over {256}} Big)} over {255}} ={{V cdot Big (255 cdot 256 – {S cdot (256 – H _ { Delta})} Big)} over {255 cdot 256}} end {align} )

Replacing the scaling factor (1 / 255 ) again with (1 / 256 ) for the final division means a similar correction as previously performed using a self-similar correction. The final division can then be performed using a 16 – bit shift operation on the nominator:

( begin {align} slope_ {down} &={{V cdot big (255 cdot 256) – {S cdot H_ { Delta}} big) {{V cdot big (255 cdot 256) – {S cdot H _ { Delta}} big)} over {256}} V} over {256 cdot 256}} \ & \ slope_ {up} &={{V cdot big (255 cdot 256) – {S cdot (256 – H _ { Delta})} big) {{ V cdot big ((255 cdot 256) – {S cdot (256 – H _ { Delta})} big)} over {256}} V} over {256 cdot 256}} end {align} )

The bottom level equation (equation 1) is unaltered with respect to the

Both slope equations use the same correction is before with the exception of a larger final addition before the division. The 3D slope of the equations is compensated by adding (V ) instead of (V / 2 ), to move the rounding error to be better balanced. It also assures a simpler calculation. The origin of this change can be found in the fix-point resolution, which is higher in this version and therefore slopes differently.

There seems to be an obvious simplification to the equations by taking the individual (V ) multiplications out in the numerator and calculate an intermediate result in parentheses before multiplication by (V ), like in: ((V cdot X) (V cdot X / 256) V=V cdot (X X / 256 1) ). However, this line of thought is flawed and fails because it effectively reduces the fix-point resolution by a factor of 2 (8) , thereby missing the target by a reasonable margin. Nor is there any significant calculation benefit in taking out (V ) in the multiplication, in terms of time, because the calculation is self-similar and only consists of additions and a shift operation.

The calculation can be written as:

uint8_ts;uint8_tv;uint8_th; (uint) ******************************************************************************************************************************************************************************************************************************************** (_t) d;uint8_tlvl_bot;uint8_tslope_up;uint8_tslope_dn; d=(v * (uint8_t) (~ s)); d =d>>8; lvl_bot=d>>8; d=s * h; D=(255>8; d =v; slope_dn=d>>16; d=s * (256 - h); D=(255>8; d =v; slope_up=d>>16;

It should be noted that not all of the intermediate results are required to be written explicitly. Only the compensation term needs to be accessible for fast calculations. The compiler will use the CPU’s native size by default. This also means that, when reading above code,you mustknowhow and whenthe compiler converts its calculations to the CPU’s native types.

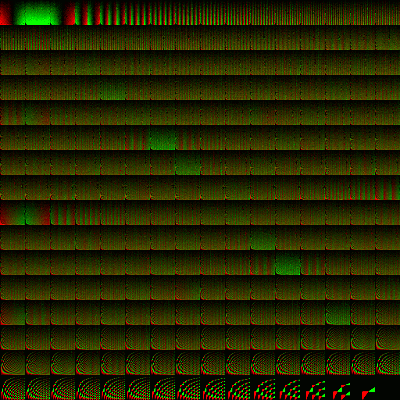

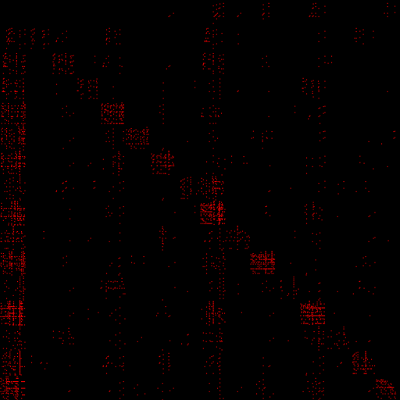

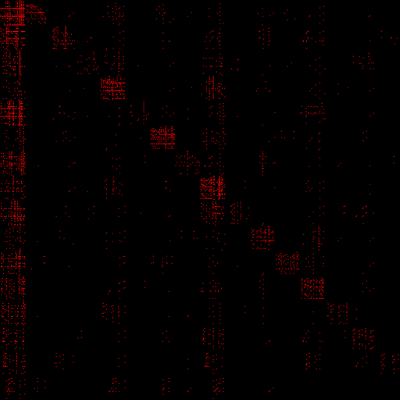

An error analysis of the slopes reveals the following maps:

|

|

The errors are all zero or 1. There are only very few errors in the map. The slope-down and slope-up have 0. 03% errors each. These are rather insignificant numbers.

Code implementations

There are two implementations of the fast HSV to RGB algorithm available. Both are implemented in C and in AVR (inline) assembly. It should run on all common Atmel chips, including attiny series which is lacking the mul instruction. The sources are written in such way that they, ideally, can compile on any architecture, including ARM, x 86 and amd 64.

The code is released under the permissive MIT license. See the individual files for details.

The header file contains two defines which control the code-generation and several defines to be used in normal code to abstract constants:

| # define | Description | |

|---|---|---|

| HSV_USE_SEXTANT_TEST | code-switch | If defined: Enable test for out-of-range hue values and limit result to sextant 5. |

| HSV_USE_ASSEMBLY | code-switch | If defined: Enable assembly optimized code. |

| HSV_HUE_SEXTANT | Number of steps within a hue sextant (256) | |

| HSV_HUE_STEPS | constant | Total number of hue steps in (degree circle) 6 * HSV_HUE_SEXTANT |

| HSV_HUE_MIN | constant | Minimum value for hue (0) |

| HSV_HUE_MAX | constant | Maximum value for hue (HSV_HUE_STEPS-1) |

| HSV_SAT_MIN | constant | Minimum value for saturation (0) |

| constant | ||

| constant | Minimum value for value (0) | |

| HSV_VAL_MAX | constant | Maximum value for value (255) |

An Arduino example:

# include"fast_hsv2rgb.h"const unsigned (PIN_RED=) ************************************************************************ (3) ;const unsigned (PIN_GRN=) ************************************************************************ (5) ;const unsigned (PIN_BLU=) ************************************************************************ (6) ; (uint) _thue;uint8_tval;int8_tdir;uint8_tprevtime;voidsetup() { (pinMode) (PIN_RED,OUTPUT); (pinMode) (PIN_GRN,OUTPUT); (pinMode) (PIN_BLU,OUTPUT); hue=HSV_HUE_MIN; val=HSV_VAL_MAX; dir=- (3) ; prevtime=(millis) (); }voidloop() {uint8_tr, g, b;uint8_tnow=(millis) ();uint8_ttdiff=now - prevtime; (if) (tdiff>=(5) ) { prevtime=now; hue ; (if) (hue>HSV_HUE_MAX) hue -=HSV_HUE_MAX; val =dir; (if) (valHSV_VAL_MAX) dir=-dir; fast_hsv2rgb _ 32 bit (hue, (HSV_SAT_MAX) , val, & r, & g, & b); analogWrite(PIN_RED, r);analogWrite(PIN_GRN, g);analogWrite(PIN_BLU, b); } }

HSV generic performance

The average performance of the implementations have been tested by calculating every possible conversion for the integer calculations (256 ⋅ 256 ⋅ 256 ⋅6, or ≈ 10 (8) ************************************************************************************************* (tests). The floating point implementation was reduced by about a factor of 25 to speedup the test (52 ⋅ 52 ⋅ 256 ⋅6 or ≈ 4⋅ 10 (6) tests). All tests were performed on an Arduino Uno R3, ATmega (P @) ******************************************************************************************************************************************************************************************************************************************************* (MHz.)

Test and call-overhead has been isolated by creating an empty function with the same prototype. The empty function only consists of a return. Therefore, all call-setup and test-related counters are encapsulated within this test. All performance-test values have been subtracted this overhead to see the actual calculation timing for the algorithms as implemented. The non-integer clock-cycles count is due to conditional branching, which are not necessarily balanced to be an integer multiple when calculating averages.

| floating point, C (float ) HW mul |

||

|---|---|---|

| Test time [ms] | 171101 | 505088 |

| Average calculation [µs] | 1. 70 | 120. 16 |

| Average calculation [clks] | 27 .2 | 1922 .5 |

(Table 1: Baseline performance) ATmega 328 P @ (MHz)

The code is tested with both a hardware multiplier (HW mul) and a software implemented multiplication (SW mul). The C-compiler uses the default for the CPU, which is hardware multiplication.

| 32 – bit, asm (SW mul) |

32 – bit, asm HW mul |

32 – bit, C HW mul |

||||

|---|---|---|---|---|---|---|

| Test time [ms] | 1480571 | 419801 | 592521 | 2329835 | 473393 | 920614 |

| Average calculation [µs] | 13. 01 | 4. 17 | 5. 89 | 21. 45 | 4. 70 | 9. 15 |

| Average calculation [clks] | 208 .1 | 66 7 | 94 .2 | 219 | 75 .2 | 146 .3 |

(Table 2: Implementation performance) ATmega 328 P @ (MHz)

Relative timing of the function body (actual calculation), sorted by timing:

| 32 – bit, asm HW mul |

32 – bit, C HW mul |

32 – bit, asm (SW mul) (float, C) |

|||||

|---|---|---|---|---|---|---|---|

| 100 .0% | 88 .7% | 70 .8% | 6% | 32 .1% | 19 .4% | 3.5% | |

| 32 – bit, asm HW mul |

112 .8% | 100 .0% | 79 .8% | 4% | 36 .2% | 21 .9% | 3.9% |

| 141 .2% | 125 .2% | 100 .0% | 64 4% | 3% | 27 .5% | ||

| 32 – bit, C HW mul |

219 .4% | 194 .6% | 155 .3% | 100 .0% | 70 .3% | .7% | |

| 311 9 % | 276 .6% | 220 .9% | 142 .2% | 100 .0% | 7% | 10 .8% | |

| 32 – bit, asm SW mul |

2% | 0% | 1% | 234 .4% | 164 .9% | 100 .0% | 17 .8% |

| float, C | 2881 .3% | (***************************************************************************************************************************************************************. 1% | 2040 1% | 1313 .2% | 923 .7% | 560 3% | 100 .0% |

(Table 3: Relative performance) ATmega 328 P @ (MHz)

It is clear that the floating point implementation is rather naive, ranging from 5.6 … 28 .8 times slower than any integer implementation. An interesting observation is that the 8-bit and 32 – bit implementations are relatively close (about a factor of two). This means that one probably could suffice with a pure C implementation most of the time and use generic portable code. Only timing constrained systems would need the assembly implementations. There is only about 12% difference between the 8-bit and 32 -bit assembly versions with hardware multiplication. Therefore, it is usually most logical to use the 32 – bit version because it is more accurate in the calculation. Only very tight systems, timing-wise, would require the 8-bit version.

The compiler (avr-gcc 4.9.3) is not very good at optimizing these specific calculations. The C-code, as it is implemented, underwent several iterations to improve on the compiler’s performance. The primary reason for the poorer performance is the C-language itself because the compiler does not know the range of values it is handling and only knows about types. Therefore, shortcuts in the calculation (for example multiplying with values ≤0x 100) cannot be optimized optimally using a generic compiler.

HSV specific performance

Above tests show the performance of the generic algorithm averaged over the entire conversion space. This is not a real-world test. The most common, and therefore important conversions, are those where the saturation is at maximum (full color) or at minimum (gray-scale). A second test was performed to examine these conversions more closely.

| 32 – bit, asm (SW mul) |

32 – bit, asm HW mul |

32 – bit, C HW mul |

||||

|---|---|---|---|---|---|---|

| Test time [ms] | 74424 | 38543 | 49806 | 130704 | 42046 | 74121 |

| Calculation S=255 [µs] | 9. 66 | 4. 18 | 5. 90 | 18. 24 | 4. 72 | 9. 61 |

| Calculation S=255 [clks] | 154 .5 | 66 9 | 94 .4 | 291. 9 | 75 .5 | 153 .8 |

| Test time [ms] | 19794 | 19794 | 26387 | 19794 | 19794 | 29683 |

| Calculation S=0 [µs] | 1. 32 | 1. 32 | 2. 33 | 1. 32 | 1. 32 | 2. 83 |

| Calculation S=0 [clks] | 21 1 | 21 1 | 37. 2 | 21 1 | 21 1 | 3) |

The average calculations using software multiplication differ significantly between the generic case ( (0 le S le 255 )) and the specific cases ( (S=0 ) and (S=255 )). The differences can be found in the multiplication algorithm and the specific values. The multiplication uses an early-out algorithm and the number of ones in the multiplicands are significant for how long the algorithm loops. The case where S=0 prevents any multiplication (pure gray-scale) and (V ) is assigned directly to the RGB constituents. The case S=255 is reduced in complexity because thebottom leveluses (V cdot (255 – S) ), which means that one multiplicand becomes zero, thus performing a very fast multiplication.

Another interesting observation, for these specific conversions with S=255, is that the 8-bit assembly implementation with software multiplication is about as fast as the 32 – bit C version using hardware multiplications. The accuracy of both differ, but if speed is the motto, then it is a good option for the very small micro controllers.

There are timing differences in the calculations due to the special handling of the sextants. The assignments are swapped zero, once or twice, depending on the sextant (seeHue sextantsabove). However, the differences are rather small as indicated below:

| (S=255) | 32 – bit, asm (SW mul) |

32 – bit, asm HW mul |

32 – bit, C HW mul |

|||

|---|---|---|---|---|---|---|

| Sextant 0 [µs] | 9. 88 | 4. 40 | 5. 97 | 18. 43 | 4. 90 | 9. 62 |

| Sextant 1 [µs] | 9. 18 | 3. 71 | 5. 41 | 17. 80 | 4. 28 | 9. 18 |

| Sextant 2 [µs] | 9. 75 | 4. 28 | 5. 97 | 18. 31 | 4. 78 | 9. 62 |

| Sextant 3 [µs] | 9. 69 | 4. 21 | 6. 04 | 18. 31 | 4. 78 | 9. 81 |

| Sextant 4 [µs] | 9. 75 | 4. 28 | 5. 97 | 18. 31 | 4. 78 | 9. 62 |

| Sextant 5 [µs] | 9. 69 | 4. 21 | 6. 04 | 18. 31 | 4. 78 | 9. 81 |

The timing differences reflect the number of pointer-swaps that must be performed, except for sextants 2 … 6 in the 32 -bit assembly implementation. The branching in the 32 – bit assembly code is balanced between the pointer -swaps and the slope-up / -down calculation. This was no intentional coding, but simply turned out be be this way.

HSV performance on ARM

No actual tests have been performed on ARM micro controllers. An inspection of the generated assembly from the C-code did reveal that the 32 -bit version performs better or as well as the 8-bit AVR assembly version. Estimating ARM performance is much more difficult because the CPU is pipelined and can execute more than one instruction at any given time, depending register scheduling and may apply out-of-order completion (Cortex-M7 or the big SOCs). Even the lower-end CPUs (M0, M1, M2, M3 and M4) have decoupled memory access and internal CPU operation by means of D- and I-cache. And then there is the C-compiler, which is actually very good at doing register scheduling (and most of the time better than humans).

That said, the most important factor for performance is the hardware multiplier, which can be a slow 32 – cycle version in M0 , M1 and M2, depending the chip-vendor’s implementation, or the fast 1-cycle version (also used in all M3 and better). You must check the vendor’s specification to see which version was implemented in the M [012] silicon.

Baseline performance of the 32 – bit HSV to RGB algorithm in C , based on ARM’s assembly inspection, is expected to follow the operating frequency of the core proportionally to the AVR’s performance above. A 32 MHz core should be able to execute the conversion in less than 2 µs and a MHz core should be able to do it in under 1 µs.

To put these numbers in perspective, a PC with an Intel Core-i5 at 2.4 GHz (64 – bit Linux OS (runs the 32 – bit conversion (in C) on average, over the entire conversion space, in 6. 39 ns per conversion including test- and call-overhead. That is more than three orders of magnitude faster than the AVR core. Rescaling the operating frequency to an Arduino at 16 MHz results in 958 ns per conversion, or still an order of magnitude faster than an AVR core. It is to be expected that an ARM is, silicon-wise, somewhere in between these extremes. Therefore, the above estimate can be considered valid, or maybe even slightly conservative.

Conclusion

The described HSV to RGB conversion algorithm is fast with near optimal mapping and no floating point requirements. The presented solution is good enough for most if not all uses and is designed to be cross-platform. There are still some minor optimizations possible for any specific implementation, like using a function return value instead of pointers. The presented implementations can be used with or without the availability of a hardware multiplier and works on very small to very large CPUs. It is up to the user to choose the best suitable version for best performance.

Appendix: Floating point calculation

Floating point calculation is not as straight forward as it might seem. It is generally a bad idea to calculate HSV to RGB values based on the 0.0 … 1.0 scale when the sources H, S, and V are not in that same range from the start. Scaling by division in floating point, when using numbers that are not a power of two, leaves small errors in the result due to truncated infinite series. These small errors will propagate and cause problems. For example, the floating point calculation of equation 1, even when calculated with double precision, is not exact. Consider the floating point calculation (( text {double}) (251. 0 / 0) cdot 255 .0 approx 251 ). The result is approximate when intermediate results are taken into account ( (251 / 255=0. 98431372549019607843 … ) with a repeating fraction). Floating point has small rounding and truncation errors that can skew the result, i.e. (int (0. 9999999999) ne 1 ). These errors are normally small and often in the order of 10– 15… 10– 12for double precision, but are extremely significant when rounding down (see also (IEEE) rounding modesandMachine epsilon). Rewriting equation 1 for proper scaling, considering that (0 le V le 255 ) and (0 le S le 255 ):

( begin {align *} level_ {bottom} &=V cdot (1.0 – S) \ & Rightarrow 255 0 cdot Big ({V over 255 .0} cdot (1.0 – {S over 255. 0}) Big) : & text {(A)} \ &=V cdot (1.0 – {S over 255 .0}) : & \ &={{V cdot (255 )} over 255 .0} : & text {(B)} end {align *} )

It is this last form (marked as ( text {B} )) that can be calculated without errors in floating point if enough digits are available in the floating point format. Neither (V ) nor (S ) is (re-) scaled to the 0.0 … 1.0 range and no intermediate results are calculated that would lose digits.

The general rule in binary floating point is that you should multiply as many terms as possible before division, as long as the multiplication result can be held in the floating point format without losing digits, or the division must be a power of two.

Rewriting equation 3 and 4 for proper scaling, considering that (0 le V le 255 ), (0 le S le 255 ) and (0 le H _ { Delta} lt 256 ) :

( begin {align *} slope_ {down} &=V cdot (1.0 – S cdot H _ { Delta}) \ & Rightarrow 255 0 cdot Big ({V over 255 .0} cdot (1.0 – {S over 255. 0} cdot {H _ { Delta } over 256. 0}) Big) : & text {(A)} \ &=V cdot (1.0 – {S over 255 .0} cdot {H _ { Delta} over 256 }) \ &={{V cdot Big (255. 0 cdot 0) – S cdot H _ { Delta} Big)} over {(255 cdot 256 0)}} \ &={{V cdot Big (65280 .0 – S cdot H _ { Delta} Big)} over {65280. 0}} : & text {(B)} & \ & \ slope_ {up} &=V cdot Big (1.0 – S cdot (1.0 – H _ { Delta}) Big) \ & Rightarrow 255 0 cdot Big ({V over 255 0} cdot big (1.0 – {S over 255 .0} cdot big [ {1.0 – {H_{Delta} over 256.0}} big] big) Big) : & text {(A)} \ &=V cdot Big (1.0 – {S over 255. 0} cdot big [ {1.0 – {H_{Delta} over 256.0}} big] Big) \ &={{V cdot Big (256. {S over 255 .0} cdot big [ {256.0 – {H_{Delta}}} big] Big)} over {256. 0}} \ &={{V cdot Big (256 cdot 0) – {S} cdot big [ {256.0 – {H_{Delta}}} big] Big)} over {(256 .0 cdot 255. 0)}} \ &={{V cdot Big (65280 .0 – {S} cdot big [ {256.0 – {H_{Delta}}} big] Big)} over {65280. 0}} : & text { (B)} end {align *} )

Both slope equation have a magnitude of (log_2 (255 cdot 255 cdot 256) approx 23. 989 : bits ), which means that it can just be held in a single precision floating point value with a ( ( 1) -bit mantissa.

The two floating point calculations, marked ( text {A} ) and ( text {B} ) above, were performed and compared to illustrate the subtle differences caused by floating point rounding errors (on an Intel Core-i5 with FPU, using gcc 5.3.1). The figure below shows the errors between the two calculation strategies.

|

|

|

|

The bottom level image shows 0. 43% errors, all at -1. The slope-down and -up images also show -1 errors at 0. 02%. These numbers may seem insignificant, but the errors are partly located at the low intensity levels, causing unnecessary disturbances in the low level’s visual appearance. The errors in equations marked ( text {A} ) are also totally unnecessary to be present because correct calculation order, marked as ( text {B} ), would eliminate them entirely. Another advantage of the calculations marked as ( text {B} ) is in the number of floating point operations required to reach a result. For example, the slope-up equation ( text {B} ) can be calculated using 5 floating point operations, whereas ( text {A} ) uses 8 floating point operations. Thus, it is Faster too.

Please note that the 32 – bit integer implementationaboveuses the same strategy as ( text {B} ), but optimizes using fix-point calculation and compensates for the power-of-two division alteration to speed-up the calculation.

Posted: 2016 – 11 – 11

Updated: 2016 – 11 – 11

GIPHY App Key not set. Please check settings